
Installing an RKE2 cluster with ACI-CNI from the Rancher UI


Cluster Installation

Prerequisites

Complete the following tasks before proceeding with the installation, following the referenced procedures on the Rancher and Cisco websites.

- Configure the following:

  - A Cisco Application Centric Infrastructure (ACI) tenant
  - An attachable entity profile (AEP)
  - A VRF
  - A Layer 3 outside connection (L3Out)
  - A Layer 3 external network for the cluster you plan to provision

Note: The VRF and L3Out in Cisco ACI that you use to provide outside connectivity to Kubernetes external services can be in any tenant. The VRF and L3Out are usually in the common tenant or in a tenant that you dedicate to the Kubernetes cluster. You can also have separate VRFs, one for the Kubernetes bridge domains and one for the L3Out, and you can configure route leaking between them.

- Prepare the nodes for Cisco ACI Container Network Interface (CNI) plug-in installation.

Follow the procedures in Cisco ACI and Kubernetes Integration on cisco.com up to the end of the section Preparing the Kubernetes Nodes.

Note: ACI CNI in nested mode is only supported with VMM-integrated VMware (with Distributed Virtual Switch).

- Have a working Rancher server installation that is reachable from your user cluster nodes.

- Ensure that the Kubernetes version you want to install or upgrade to is supported in your environment. See the Cisco ACI Virtualization Compatibility Matrix and the list of supported versions on the Rancher website.

- Fulfill the requirements for the nodes where you will install apps and services, including the networking requirements for RKE2. See the Node Requirements pages on the Rancher and RKE2 websites.

- Install acc-provision:

`pip install acc-provision==<version>`

Note: Use the same version as the ACI-CNI release you are installing. The full list of acc-provision releases is published on PyPI.

- Generate the ACI-CNI manifests using the acc-provision tool with the appropriate RKE2 flavor.

To list the available flavors:

`acc-provision --list-flavors`

The following command uses the RKE2-kubernetes-1.27 flavor to generate the manifests and writes them to a file named rke2-manifests.yaml:

`acc-provision -a -c acc_provision_input.yaml -u <username> -p <password> -f RKE2-kubernetes-1.27 -o rke2-manifests.yaml`

Note: The `-a` option pushes the configuration to your ACI fabric and creates the required resources.

Use `-h` or `--help` to see all available options.

Optional: If you are installing the Logging and Monitoring apps on your RKE2 cluster and want to override the default “cattle-logging” and “cattle-prometheus” namespaces, set the variables ‘logging_namespace’ and ‘monitoring_namespace’ under ‘rke2_config’ in the input file to the names of the namespaces where the apps should be installed.

Refer to the Cisco APIC Container Plug-in Release Notes for details on the available configuration options and features.
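If you repeat these prerequisite steps often, you can wrap them in a few shell functions. The sketch below is a minimal example that uses only the flags shown above. The function names and the `PIP` and `ACC_PROVISION` override variables are hypothetical conveniences and are not part of acc-provision. If you set either variable to `echo`, the function prints the command instead of running it.

```shell
# Sketch: prerequisite acc-provision steps as shell functions (source this file).
# PIP and ACC_PROVISION default to the real tools; set them to "echo" to print
# the commands instead of running them.

# Install the acc-provision release that matches the ACI-CNI version.
install_acc_provision() {
  local version="$1"
  "${PIP:-pip}" install "acc-provision==${version}"
}

# List the flavors acc-provision knows about, to pick the RKE2 one.
list_flavors() {
  "${ACC_PROVISION:-acc-provision}" --list-flavors
}

# Push the config to the ACI fabric (-a) and write the RKE2 manifests to a file.
generate_rke2_manifests() {
  local user="$1" password="$2" flavor="$3" output="$4"
  "${ACC_PROVISION:-acc-provision}" -a -c acc_provision_input.yaml \
    -u "$user" -p "$password" -f "$flavor" -o "$output"
}

# Example:
#   install_acc_provision <version>
#   list_flavors
#   generate_rke2_manifests <username> <password> RKE2-kubernetes-1.27 rke2-manifests.yaml
```

As in the documented command, this sketch passes the password on the command line. Other local users may be able to see it in the process list.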

Installation

Follow this procedure in the Rancher UI to install the cluster:

-

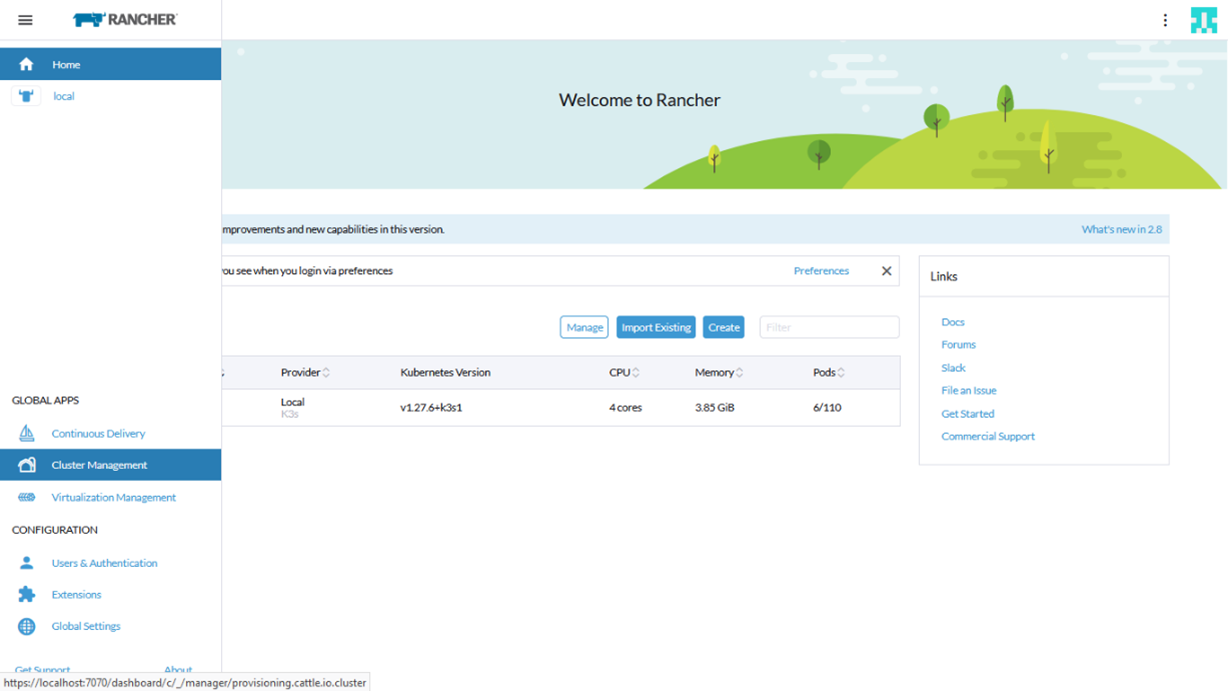

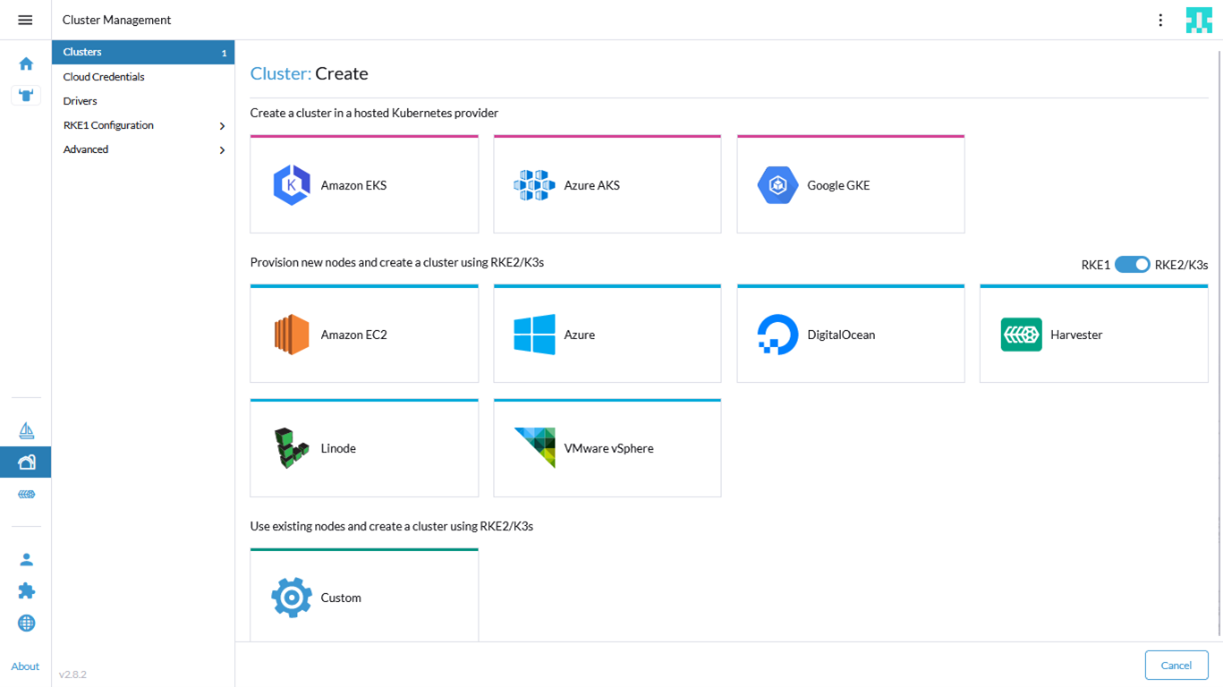

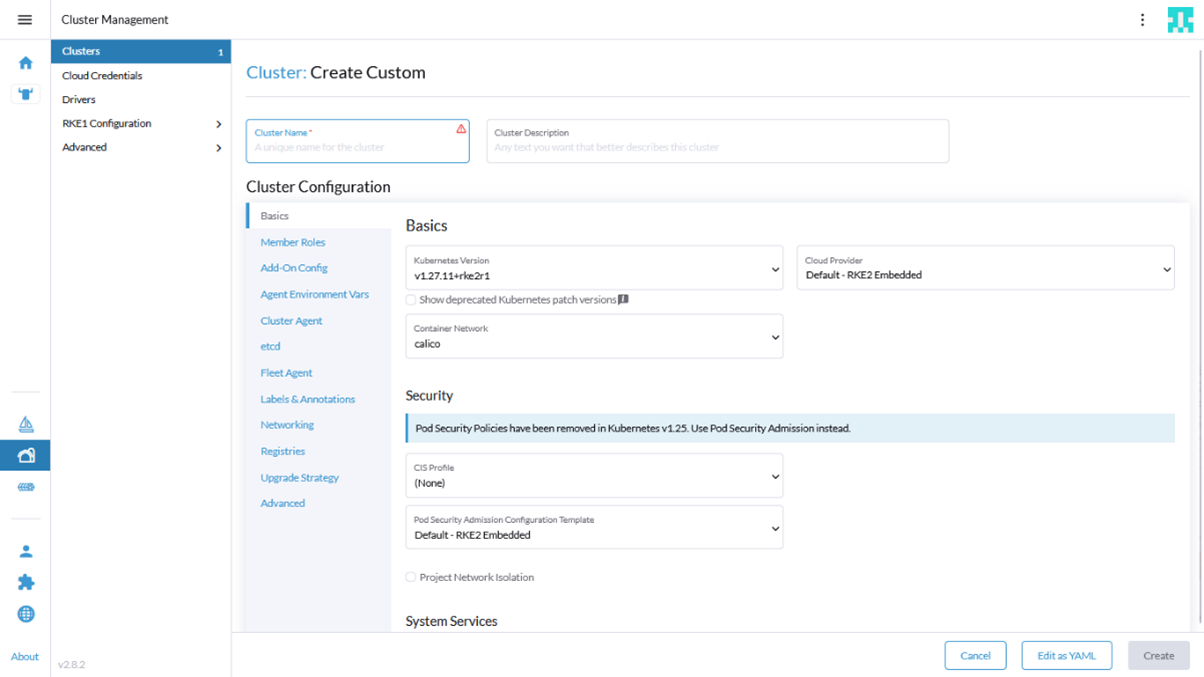

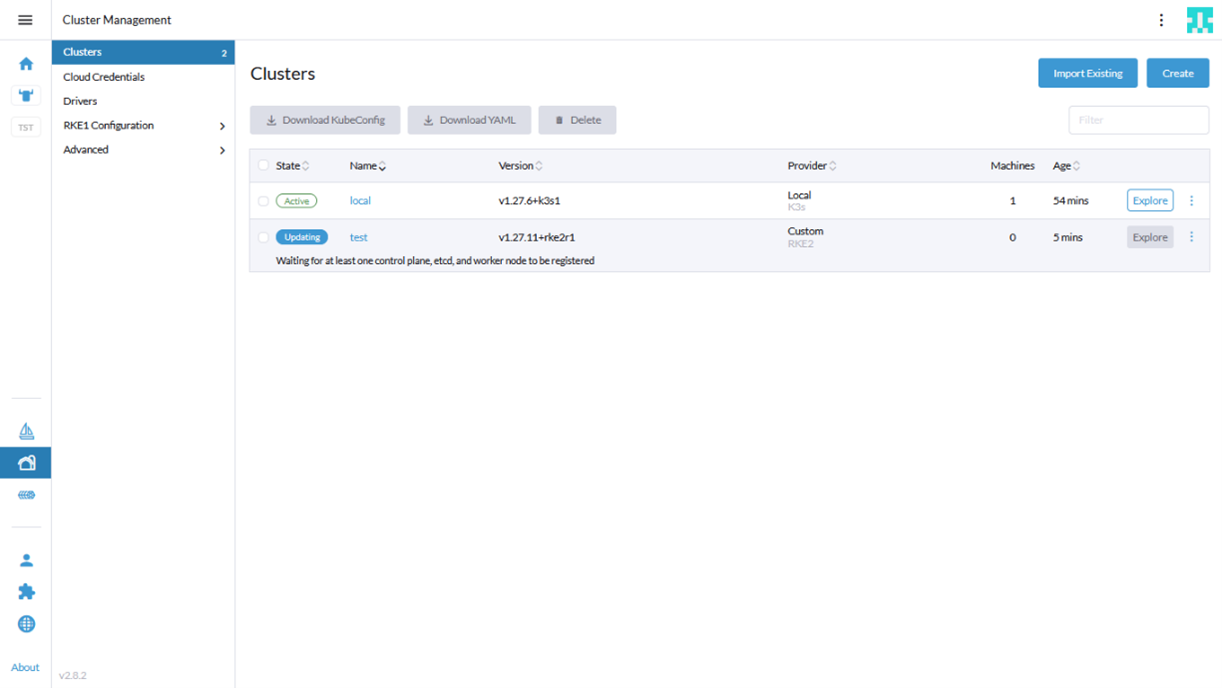

From Rancher UI, go to Cluster Management and then click on Create.

-

On the right side of the page, adjust the toggle such that RKE2/K3s option is enabled cluster option. Go on to select the custom cluster option at the bottom of the page.

-

Enter the Cluster name in the cluster configuration page and select the Kubernetes version.

-

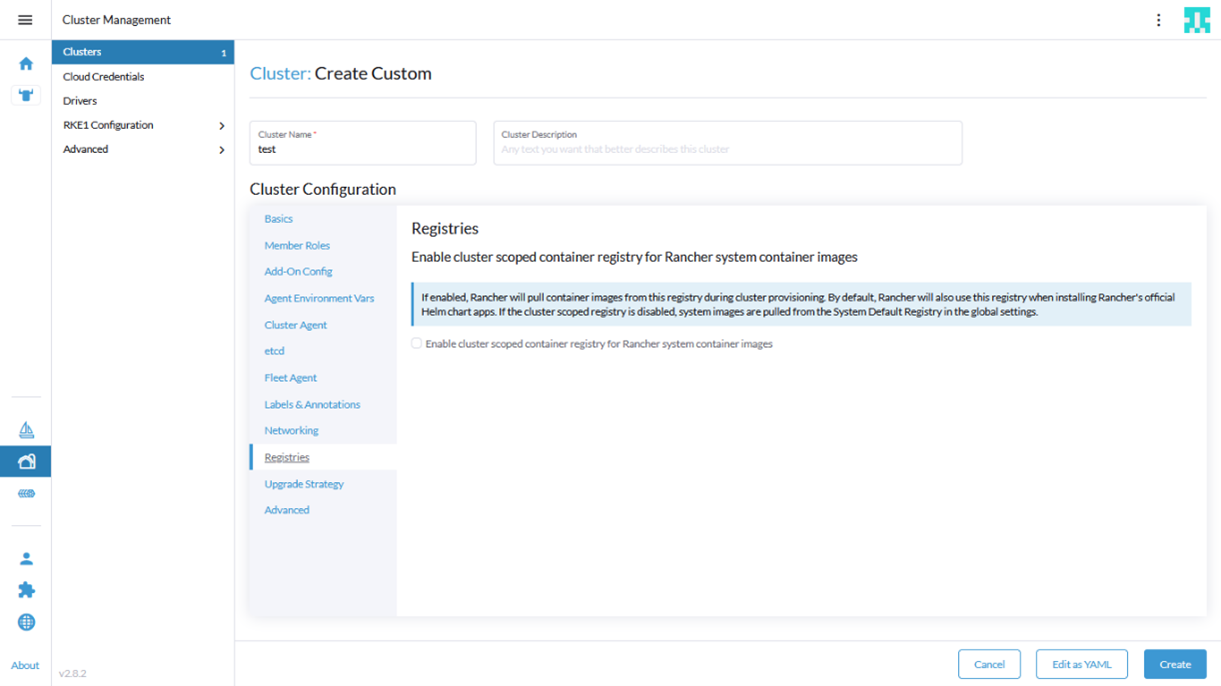

Add any private registries under the Registries section.

-

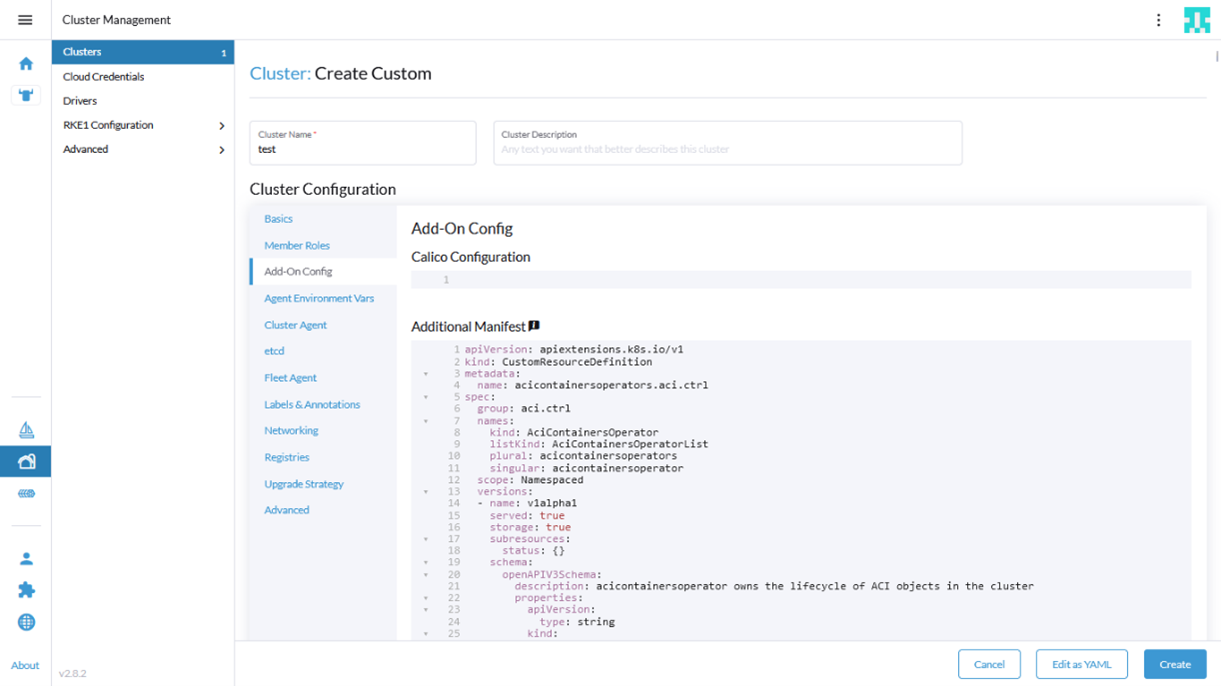

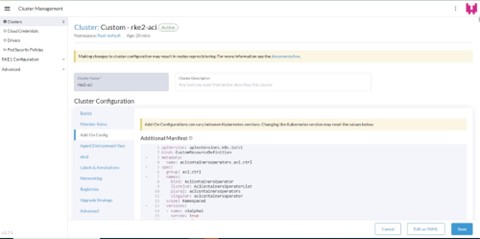

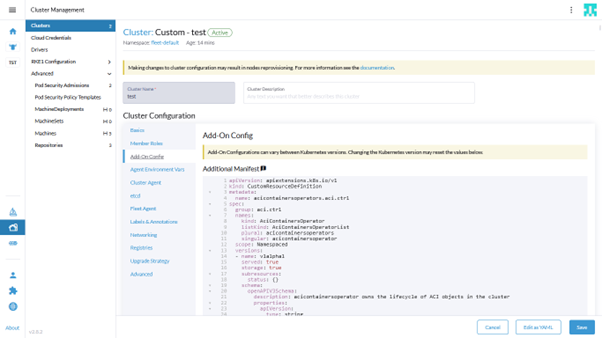

Click on Add-On Config section, copy the generated manifest (rke2-manifests.yaml from pre-requisites step 2 ) into the Additional Manifest UI text box.

-

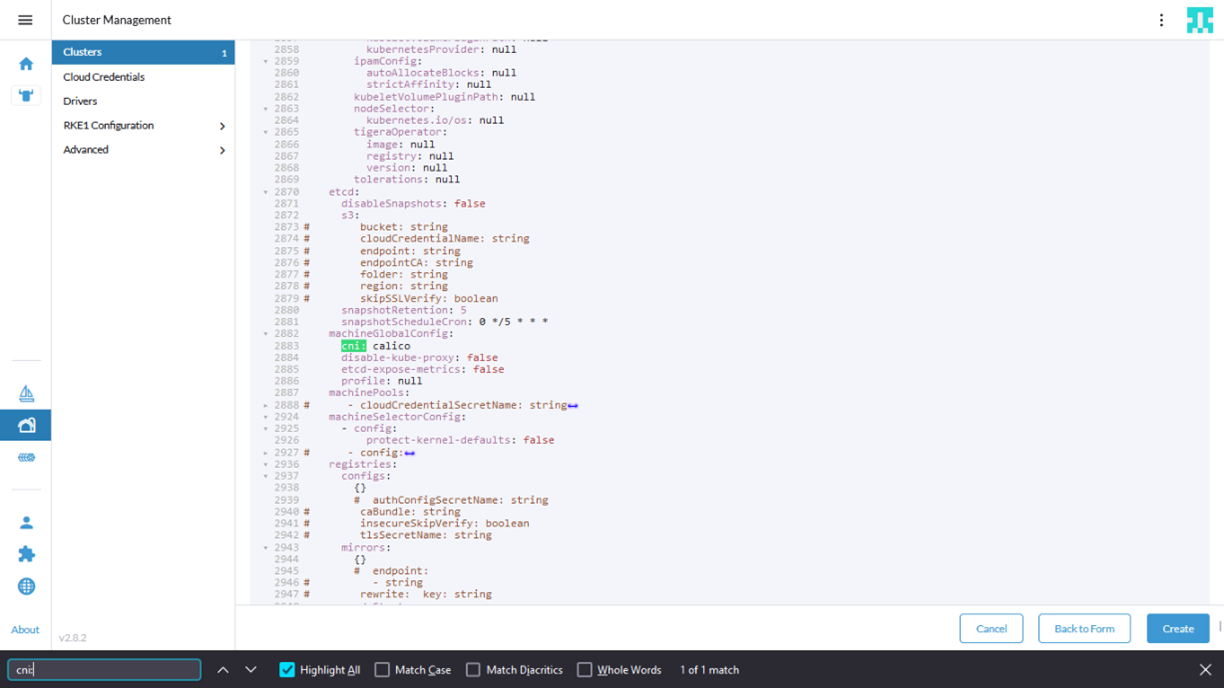

Click on ‘Edit Yaml’ option, and change the field ‘cni’ from ‘calico’ (default) to ‘none’

-

Click on ‘Create’.

-

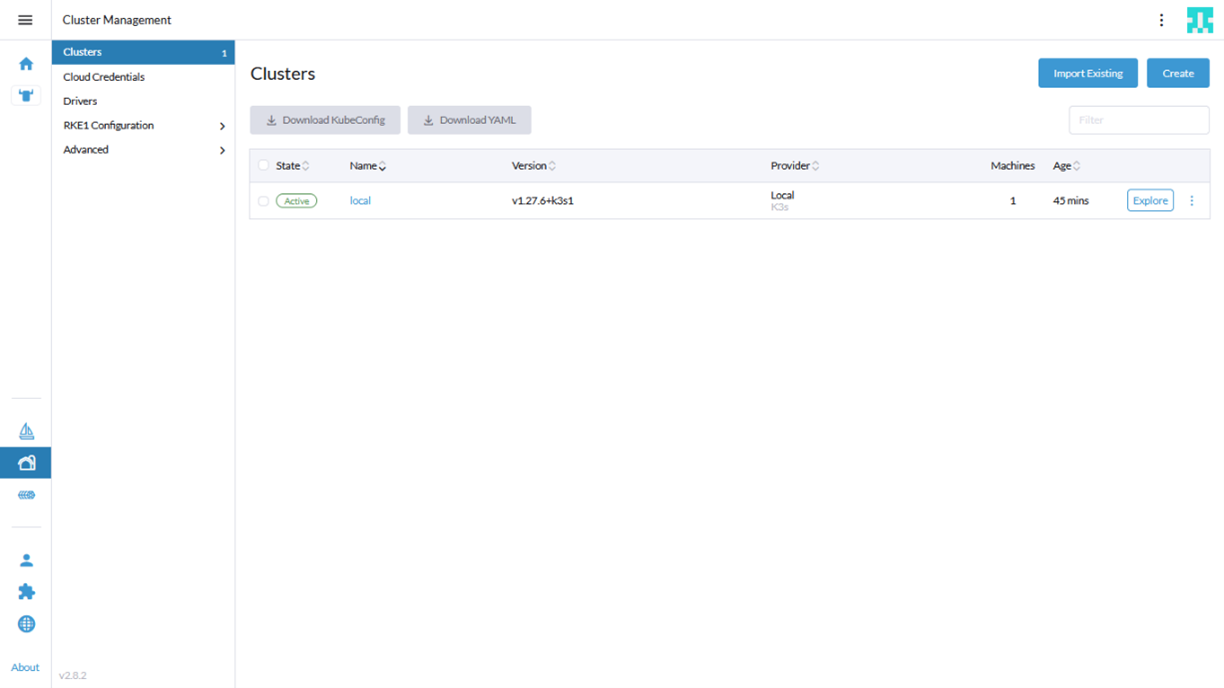

From the cluster management page, select the new cluster.

-

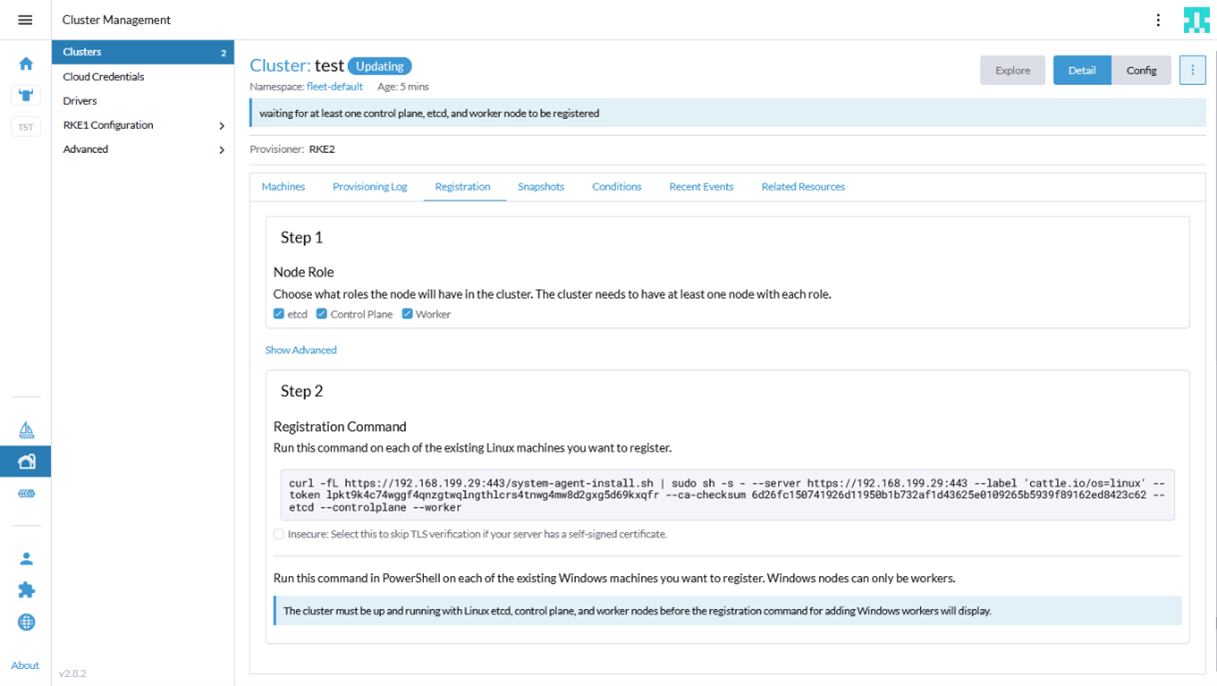

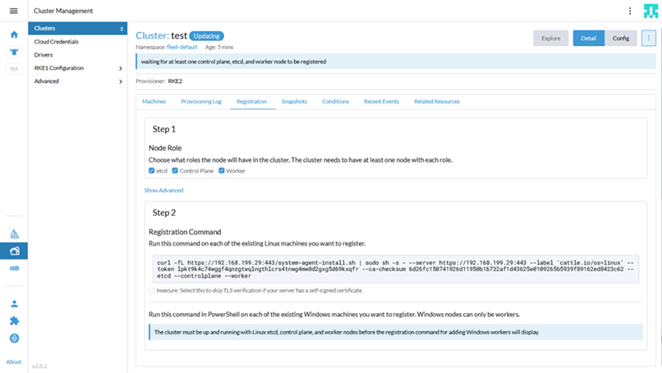

Go to Registration tab. Select the node roles and copy the command.

-

Go to the node and run the command to register the node in the cluster.

-

Repeat steps 9 & 10 to register more nodes.

-

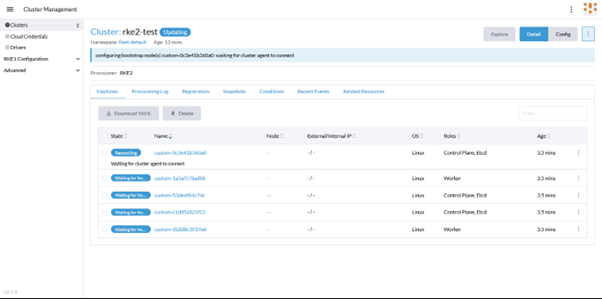

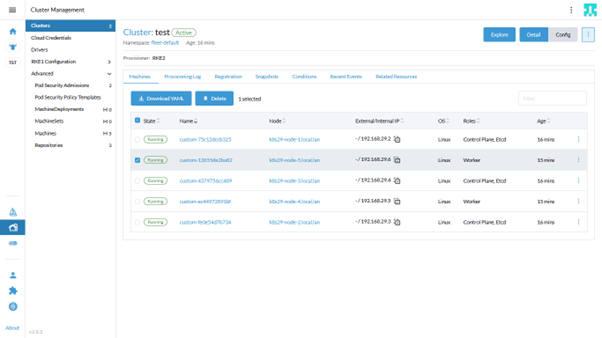

Wait for some time, a bootstrap node will be setup first followed by the rest and the cluster will go to an active state.
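After the cluster is Active, you can check from the command line that the nodes joined and that the ACI-CNI pods are healthy. The sketch below assumes that kubectl is configured with the new cluster's kubeconfig, for example one downloaded from the Rancher UI. The function names, the `KUBECTL` override, and the 600s default timeout are illustrative choices. `kubectl wait` waits for every pod in the namespace. If the namespace contains pods that never become Ready, such as completed jobs, narrow the selector.

```shell
# Sketch: post-install checks, assuming kubectl uses the new cluster's kubeconfig.
# KUBECTL defaults to kubectl; set it to "echo" to print the command instead.

# Show every node with its status, roles, and addresses.
show_nodes() {
  "${KUBECTL:-kubectl}" get nodes -o wide
}

# Wait until all pods in aci-containers-system are Ready (default timeout 600s).
wait_for_aci_cni() {
  local timeout="${1:-600s}"
  "${KUBECTL:-kubectl}" wait --for=condition=Ready pods --all \
    -n aci-containers-system --timeout="$timeout"
}

# Example:
#   show_nodes
#   wait_for_aci_cni 900s
```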

Cluster Operations

This section assumes that a cluster already exists, created by following the steps above.

ACI-CNI Upgrade

- Uninstall the old acc-provision release:

`pip uninstall acc-provision`

- Install the new acc-provision release:

`pip install acc-provision==<new_version>`

- Generate new ACI-CNI manifests using the appropriate flavor:

`acc-provision --upgrade -c acc_provision_input.yaml -u <username> -p <password> -f RKE2-kubernetes-1.27 -o rke2-manifests-new.yaml`

- Replace the old manifests in the Add-On Config section of the cluster on the Rancher UI with the newly generated manifests, and save.
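You can chain the first three upgrade steps into one function. The sketch below assumes the same input file and flags as the steps above. `upgrade_acc_provision` and the `PIP`/`ACC_PROVISION` overrides are hypothetical names. The `-y` flag only suppresses pip's uninstall confirmation prompt. You still have to paste the generated manifests into the Rancher UI by hand.

```shell
# Sketch: uninstall old acc-provision, install the new release, regenerate manifests.
# PIP and ACC_PROVISION default to the real tools; set them to "echo" for a dry run.
# Arguments: new version, APIC username, APIC password, flavor, output file.
upgrade_acc_provision() {
  local new_version="$1" user="$2" password="$3" flavor="$4" output="$5"
  # -y answers pip's "Proceed?" prompt so the function can run unattended.
  "${PIP:-pip}" uninstall -y acc-provision || return 1
  "${PIP:-pip}" install "acc-provision==${new_version}" || return 1
  "${ACC_PROVISION:-acc-provision}" --upgrade -c acc_provision_input.yaml \
    -u "$user" -p "$password" -f "$flavor" -o "$output"
}

# Example:
#   upgrade_acc_provision <new_version> <username> <password> RKE2-kubernetes-1.27 rke2-manifests-new.yaml
```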

ACI-CNI Configuration Update

-

If any variable configuration has to be added/modified/removed, change the configuration accordingly in the input file.

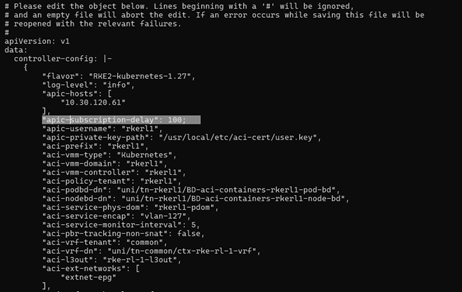

eg: add the variable ‘apic_subscription_delay: 100’ under aci_config section of acc_provision_input file.

- Generate the new manifests using acc-provision tool.

acc-provision -c acc_provision_input.yaml -u <username> -p <password> -f RKE2-kubernetes-1.27 -o rke2-manifests.yaml -

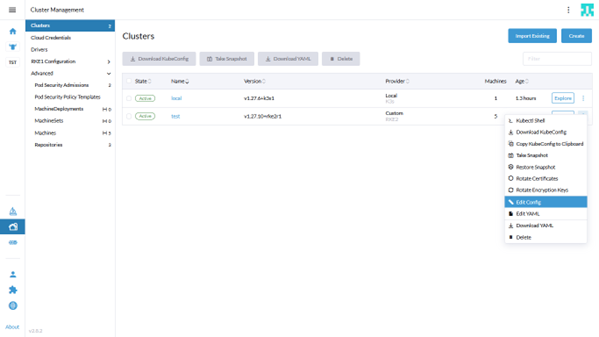

On Rancher UI, From Cluster Management Page, select the ‘Edit Config’ option for the cluster whose configuration should be modified

-

Replace the old manifests in ‘Add-on Config’ section of the cluster on Rancher UI with newly generated manifests and click on save.

-

Wait until cluster goes to active state.

We can verify the ConfigMap resources in aci-containers-system namespace to see if the variable change is reflected in them. For example, whether ‘apic_subscription_delay: 100’ is being added in aci-containers-config ConfigMap.

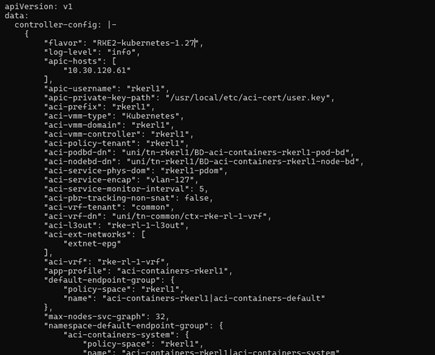

kubectl get cm -n aci-containers-system aci-containers-config -o yamlBefore Update:

After Update:
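To compare the ConfigMap before and after the update, save a snapshot at each point and diff the two files. The sketch below uses hypothetical function and file names. The `KUBECTL` override exists only to make the functions testable. The search pattern is an assumption: the key may not appear in the rendered ConfigMap with exactly the spelling used in the input file, so adjust the pattern if needed. Some metadata fields, such as resourceVersion, will also differ between the snapshots.

```shell
# Sketch: snapshot the aci-containers-config ConfigMap and search a snapshot.
# KUBECTL defaults to kubectl and can be overridden for testing.

# Save the ConfigMap, as YAML, to the file given as $1.
snapshot_aci_config() {
  "${KUBECTL:-kubectl}" get cm -n aci-containers-system aci-containers-config -o yaml > "$1"
}

# Succeed if snapshot file $1 contains the fixed string $2.
snapshot_has() {
  grep -qF -- "$2" "$1"
}

# Example:
#   snapshot_aci_config before.yaml   # before saving the new manifests in Rancher
#   snapshot_aci_config after.yaml    # after the cluster is Active again
#   diff before.yaml after.yaml
#   snapshot_has after.yaml apic_subscription_delay
```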

Cluster Node Addition and Removal

Addition

- From the Cluster Management page, click your cluster and go to the ‘Registration’ tab. Select the node roles and copy the command.

- Log in to the node you want to register and run the copied command to add the node to the cluster.

- Wait for the new node to reach the Running state and for the cluster to return to the Active state.
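After you run the registration command, you can wait for the node from the command line instead of watching the UI. The sketch below assumes kubectl access to the cluster. It also assumes that you pass the node's Kubernetes name, which is usually the host name; confirm it with `kubectl get nodes`. The function name and the timeout default are hypothetical.

```shell
# Sketch: wait for a newly registered node to become Ready.
# KUBECTL defaults to kubectl; set it to "echo" to print the command instead.

# $1 = Kubernetes node name, $2 = optional timeout (default 600s).
wait_for_node() {
  local node="$1" timeout="${2:-600s}"
  "${KUBECTL:-kubectl}" wait --for=condition=Ready "node/${node}" --timeout="$timeout"
}

# Example:
#   kubectl get nodes          # find the new node's name
#   wait_for_node <node-name>
```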

Removal

-

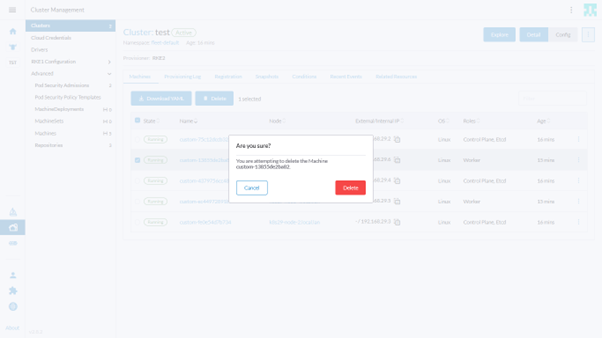

Click on your cluster from the Cluster Management page, select the node you want to remove from the cluster and click on the delete option.

-

Click Delete in the pop-up to confirm deletion.
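To confirm from the command line that a deleted node has left the cluster, you can poll for it. The sketch below assumes kubectl access to the cluster. The function names, attempt count, and interval are hypothetical choices. With `--ignore-not-found`, `kubectl get` exits successfully with empty output for a missing node. This lets the sketch distinguish a removed node from a kubectl or connectivity error.

```shell
# Sketch: confirm a node deleted in Rancher has left the cluster.
# KUBECTL defaults to kubectl and can be overridden for testing.

# Succeed only if kubectl runs and no longer finds node $1.
node_removed() {
  local out
  out="$("${KUBECTL:-kubectl}" get node "$1" --ignore-not-found -o name)" || return 1
  [ -z "$out" ]
}

# Poll node_removed: $2 attempts (default 30), $3 seconds apart (default 10).
wait_node_removed() {
  local node="$1" attempts="${2:-30}" interval="${3:-10}" i=0
  while [ "$i" -lt "$attempts" ]; do
    node_removed "$node" && return 0
    sleep "$interval"
    i=$((i + 1))
  done
  return 1
}

# Example:
#   wait_node_removed <node-name>
```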

Notes

-

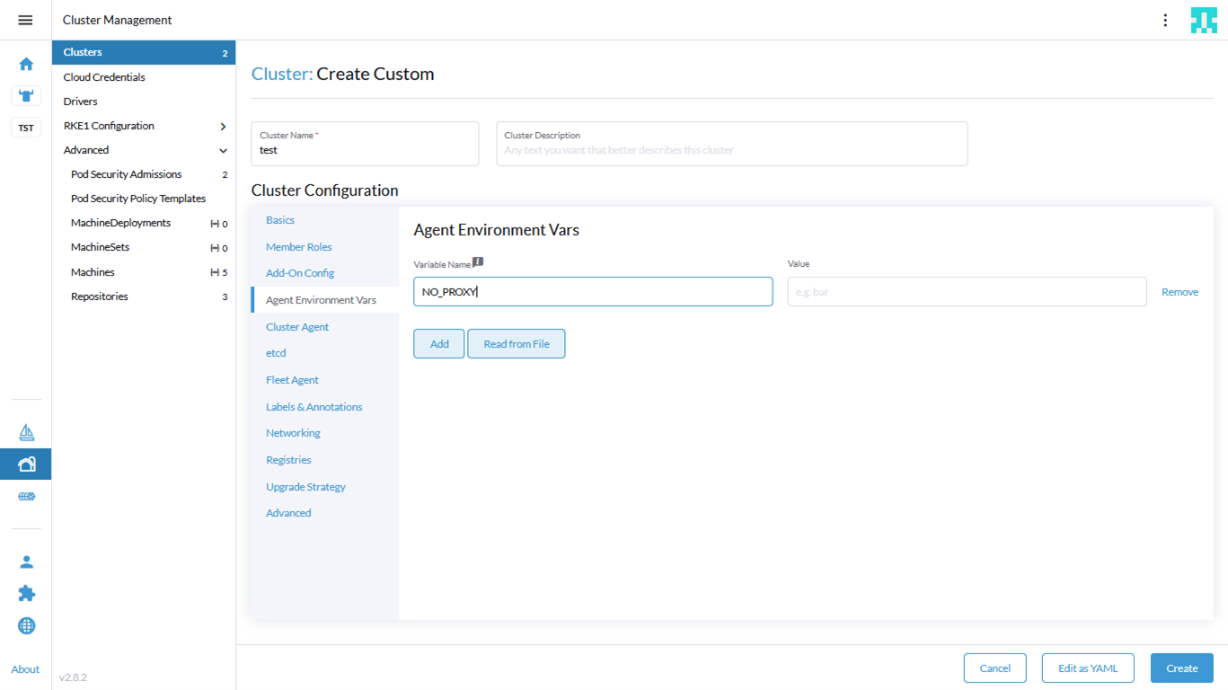

You will need to specify any additional proxy configuration in the Agent Environment Vars section while installing, if running behind proxy.
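A small helper can format the proxy settings for entry in the Agent Environment Vars section. The sketch below assumes the standard HTTP_PROXY, HTTPS_PROXY, and NO_PROXY variable names. The function name is hypothetical, and the proxy URL and NO_PROXY entries in the example are placeholders. Which addresses and CIDRs belong in NO_PROXY depends on your environment.

```shell
# Sketch: print proxy variables as KEY=VALUE lines to copy into
# the Agent Environment Vars section of the Rancher cluster config.
# Usage: proxy_agent_env <proxy-url> <no-proxy-entry>...
proxy_agent_env() {
  [ "$#" -ge 1 ] || return 1
  local proxy="$1"
  shift
  # Join the remaining arguments with commas, the usual NO_PROXY separator.
  local no_proxy
  no_proxy="$(IFS=,; printf '%s' "$*")"
  printf 'HTTP_PROXY=%s\n' "$proxy"
  printf 'HTTPS_PROXY=%s\n' "$proxy"
  printf 'NO_PROXY=%s\n' "$no_proxy"
}

# Example (placeholder values):
#   proxy_agent_env http://proxy.example.com:8080 127.0.0.1 localhost .svc .cluster.local
```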